I just read the Walrus Foundation’s blog post from January 22, 2026, “Bad Data Costs Billions. Verifiability is the Answer,” and it nailed exactly why I’ve been using Walrus more and more for my own projects. The numbers hit hard: 87% of AI projects flop before production because of crappy data, adtech wastes a third of its $750B yearly spend on fraud and unverified impressions, and even Amazon had to ditch its AI recruiting tool because of biased training data. Bad data isn’t just a glitch; it’s a billion-dollar killer, and Walrus is stepping in with verifiable storage that makes it fixable.

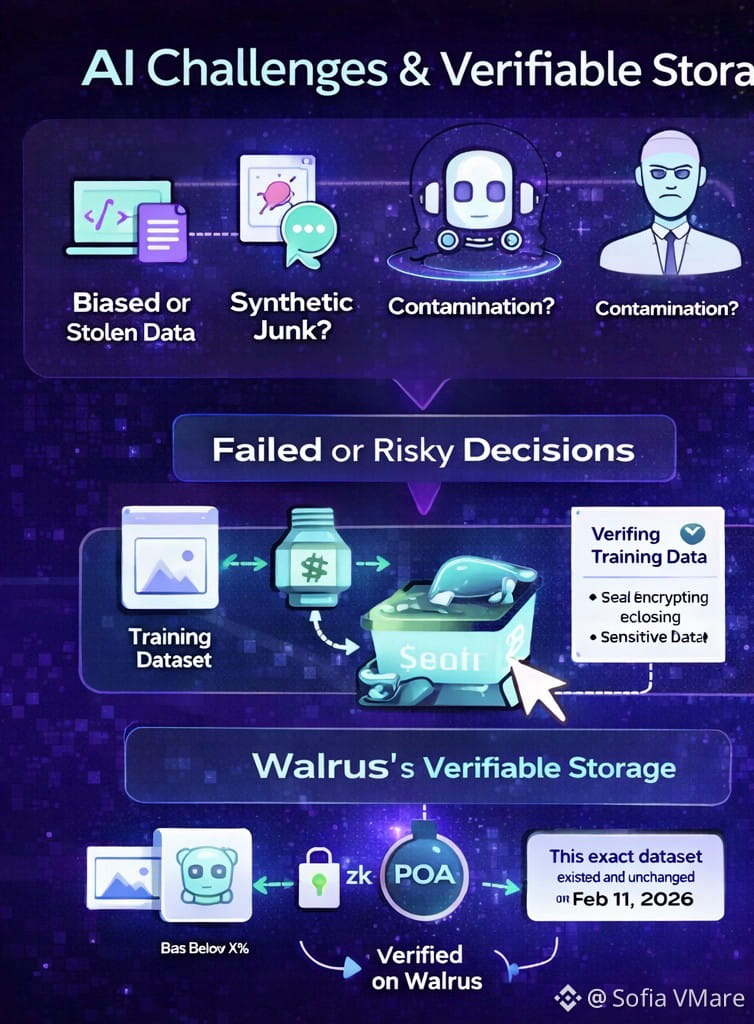

I’ve felt the pain myself. Last year I tried fine-tuning a small language model on my freelance writing samples and some public texts. When I showed it to a potential client, they asked, “How do I know this wasn’t trained on stolen or synthetic junk?” I had no solid answer, just “I cleaned it myself.” That’s the core issue the post highlights: without provenance, you’re guessing. You can’t prove the data’s origin, its cleanliness, or that it wasn’t poisoned. For high-stakes work like hiring, medical diagnoses, or ad campaigns, guessing means massive losses.

Walrus changes that for me. Every file I upload becomes a blob with a unique ID, and once enough storage nodes confirm they hold it, a Proof of Availability (PoA) certificate lands on Sui: an on-chain stamp saying “this exact dataset existed, was retrievable, and was unchanged on February 11, 2026.” I can share the blob ID and PoA to prove “here’s the raw training data I used.” Nobody has to trust my word; the chain records it. I tested it with a 5 GB fine-tune snapshot: I uploaded it, ran a verifiable computation through Nautilus (Sui’s framework for attested off-chain compute in trusted execution environments) to confirm “trained only on this curated set,” and verified the result on Sui. It felt like having actual evidence instead of hoping.
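Sharing a blob ID works as evidence because of content addressing: the identifier is derived from the bytes, so anyone holding a copy can recompute it and check that nothing changed. Below is a minimal sketch of that idea, assuming a plain SHA-256 digest as a stand-in. Walrus derives its real blob IDs from commitments over the erasure-coded slivers, so this digest will not equal a Walrus blob ID, and the function names are hypothetical.

```python
import hashlib
from pathlib import Path
from typing import Iterable, Iterator


def file_chunks(path: Path, chunk_size: int = 1 << 20) -> Iterator[bytes]:
    """Yield a file's bytes in 1 MiB chunks so multi-GB datasets never sit fully in memory."""
    with path.open("rb") as f:
        while chunk := f.read(chunk_size):
            yield chunk


def fingerprint(chunks: Iterable[bytes]) -> str:
    """SHA-256 hex digest over a stream of chunks.

    Stand-in only: a real Walrus blob ID is computed by Walrus from the
    erasure-coded blob, so it will NOT match this digest.
    """
    digest = hashlib.sha256()
    for chunk in chunks:
        digest.update(chunk)
    return digest.hexdigest()


def matches_published(chunks: Iterable[bytes], published: str) -> bool:
    """True if these bytes hash to the fingerprint that was published earlier."""
    return fingerprint(chunks) == published
```

In practice you would run `fingerprint(file_chunks(Path("snapshot.tar")))` (hypothetical filename) once when publishing, then again on any copy someone asks you to trust; a single flipped byte changes the digest.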

The post spotlights Alkimi using Walrus for adtech: storing impressions, bids, and transactions as tamper-proof blobs. Seal encryption protects client information, and verifiable computation (Nautilus) lets them reconcile accurately without leaking data. Advertisers get proof their money wasn’t wasted on bots. I use similar logic for my freelance deliverables: I store project files as blobs, and if a client disputes a delivery, I share the PoA as instant proof. No faked screenshots.
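The post doesn’t describe Alkimi’s internal record format, so here is a generic technique swapped in for illustration: a Merkle tree. It commits a whole batch of records (say, one blob of impressions) to a single 32-byte root, and can later prove that one specific record belongs to the batch by revealing only sibling hashes, not the other records. The record strings and function names are hypothetical; this is a sketch, not Alkimi’s or Walrus’s implementation.

```python
import hashlib
from typing import List, Tuple


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def leaf_hash(record: bytes) -> bytes:
    # 0x00 prefix separates leaves from internal nodes (0x01) so they can't be confused.
    return _h(b"\x00" + record)


def node_hash(left: bytes, right: bytes) -> bytes:
    return _h(b"\x01" + left + right)


def merkle_root(records: List[bytes]) -> bytes:
    """Commit to a batch of records with one 32-byte root."""
    if not records:
        raise ValueError("need at least one record")
    level = [leaf_hash(r) for r in records]
    while len(level) > 1:
        if len(level) % 2:  # odd level: duplicate the last node (one simple convention)
            level.append(level[-1])
        level = [node_hash(level[i], level[i + 1]) for i in range(0, len(level), 2)]
    return level[0]


def inclusion_proof(records: List[bytes], index: int) -> List[Tuple[bytes, bool]]:
    """Sibling hashes from leaf to root; the bool is True when the sibling is on the right."""
    level = [leaf_hash(r) for r in records]
    proof = []
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])
        sibling = index ^ 1  # the other child of the same parent
        proof.append((level[sibling], sibling > index))
        level = [node_hash(level[i], level[i + 1]) for i in range(0, len(level), 2)]
        index //= 2
    return proof


def verify(record: bytes, proof: List[Tuple[bytes, bool]], root: bytes) -> bool:
    """Recompute the path from one record up to the root and compare."""
    h = leaf_hash(record)
    for sibling, sibling_is_right in proof:
        h = node_hash(h, sibling) if sibling_is_right else node_hash(sibling, h)
    return h == root
```

Publishing the root next to the blob ID lets an advertiser check any single impression they are shown against it, without seeing the rest of the batch.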

For AI, it’s bigger. Amazon scrapped its tool over biased training data that had no audit trail. With Walrus + Nautilus, you can store encrypted datasets, run a verifiable check for “bias below X%,” and publish the result publicly. The data stays private; the verification is open. DeSci projects, personal AI, and ad-revenue tokenization (AdFi) are building on this.
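“Bias below X%” needs a concrete metric. As an illustrative choice (not from the post), this sketch uses the demographic parity gap: the largest difference in positive-outcome rate between groups. It shows what a published claim could contain, a dataset digest plus a pass/fail verdict, while the rows themselves stay private. It is plain Python with no enclave, attestation, or zero-knowledge proof; in the Walrus + Nautilus setup described in the paragraph above, a computation like this would run inside the verifiable environment, which vouches for its output. The row schema and field names are hypothetical.

```python
import hashlib
import json
from collections import defaultdict
from typing import Dict, List, Tuple

Row = Tuple[str, int]  # (group label, outcome in {0, 1}); hypothetical schema


def selection_rates(rows: List[Row]) -> Dict[str, float]:
    """Share of positive outcomes within each group."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, outcome in rows:
        totals[group] += 1
        positives[group] += outcome
    return {g: positives[g] / totals[g] for g in totals}


def parity_gap(rows: List[Row]) -> float:
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(rows)
    if not rates:
        raise ValueError("no rows to evaluate")
    return max(rates.values()) - min(rates.values())


def bias_claim(rows: List[Row], threshold: float) -> dict:
    """Build the public claim: dataset digest plus verdict, without the rows."""
    # Sort before hashing so the digest doesn't depend on row order.
    canonical = json.dumps(sorted(rows), separators=(",", ":")).encode()
    return {
        "dataset_sha256": hashlib.sha256(canonical).hexdigest(),
        "metric": "demographic_parity_gap",
        "threshold": threshold,
        "passes": parity_gap(rows) < threshold,
    }
```

Only the returned dictionary would be published; the design choice is that verifiers learn which dataset was checked and whether it cleared the bar, not who is in it.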

The economics make sense too. A 50 GB dataset on Walrus is ~$0.05/month, because erasure coding keeps storage overhead around 4–5x versus 20–100x full replication elsewhere. Fees are paid in $WAL, and storage nodes stake $WAL to host verifiable blobs and earn rewards. As verifiable data becomes mandatory under rules like the EU AI Act and US regulations, $WAL captures value from every layer: storage, proof, and verification.
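The overhead comparison is easy to sanity-check with arithmetic: what matters is how many bytes the network physically stores for each byte you upload. This sketch assumes cost scales linearly with stored bytes; the per-GB price is a placeholder parameter, not Walrus’s actual pricing, so it does not reproduce the ~$0.05 figure.

```python
# Assumption: cost scales linearly with physically stored GB; any price used is a placeholder.
def stored_gb(raw_gb: float, overhead: float) -> float:
    """GB the network physically holds for raw_gb of user data at a given overhead factor."""
    return raw_gb * overhead


def monthly_cost(raw_gb: float, overhead: float, price_per_stored_gb_month: float) -> float:
    """Monthly bill under the linear-cost assumption above."""
    return stored_gb(raw_gb, overhead) * price_per_stored_gb_month


if __name__ == "__main__":
    dataset_gb = 50
    for label, overhead in [("erasure coding, ~5x", 5),
                            ("full replication, 20x", 20),
                            ("full replication, 100x", 100)]:
        print(f"{label}: {stored_gb(dataset_gb, overhead):,.0f} GB stored")
```

For the same 50 GB, that is 250 GB stored versus 1,000 to 5,000 GB, so at any fixed per-GB price the fully replicated setups pay 4 to 20 times more.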

The Walrus Foundation is pushing this: its RFP grants fund zk-tooling, provenance SDKs, and fair-training templates. Alkimi is just the start; I can see DeSci, my own AI experiments, and even ad fraud prevention running on it.

In 2026, when bad data costs billions and trust is scarce, Walrus isn’t just storage; it’s the trust layer. If you work with data (AI training, ad metrics, records, research), try uploading a dataset to Walrus, run a simple proof, and verify it on Sui. You’ll feel the shift: data becomes provable, trustworthy, and yours.
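To try the upload step from code, Walrus exposes publisher (write) and aggregator (read) HTTP services. This sketch assumes the `PUT /v1/blobs?epochs=N` and `GET /v1/blobs/<blob-id>` endpoints and the `newlyCreated` / `alreadyCertified` response shapes as I understand them from the Walrus docs; endpoint paths have changed between releases, so check the current docs before relying on them. The publisher and aggregator URLs are placeholders you supply, and the on-chain check on Sui is not shown.

```python
import json
import urllib.request

# Assumed Walrus HTTP API shapes; verify against the current Walrus docs.


def blob_id_from_response(body: dict) -> str:
    """Publishers answer with either newlyCreated or alreadyCertified (assumed shape)."""
    if "newlyCreated" in body:
        return body["newlyCreated"]["blobObject"]["blobId"]
    if "alreadyCertified" in body:
        return body["alreadyCertified"]["blobId"]
    raise ValueError(f"unexpected publisher response: {body}")


def store_blob(publisher: str, data: bytes, epochs: int = 1) -> str:
    """PUT raw bytes to a publisher and return the blob ID (makes a network call)."""
    req = urllib.request.Request(
        f"{publisher.rstrip('/')}/v1/blobs?epochs={epochs}", data=data, method="PUT"
    )
    with urllib.request.urlopen(req) as resp:
        return blob_id_from_response(json.load(resp))


def read_url(aggregator: str, blob_id: str) -> str:
    """Aggregator URL for fetching a blob by ID."""
    return f"{aggregator.rstrip('/')}/v1/blobs/{blob_id}"


def read_blob(aggregator: str, blob_id: str) -> bytes:
    """GET the blob's bytes back from an aggregator (makes a network call)."""
    with urllib.request.urlopen(read_url(aggregator, blob_id)) as resp:
        return resp.read()
```

A quick round trip is `blob_id = store_blob(PUBLISHER, data, epochs=5)` followed by checking `read_blob(AGGREGATOR, blob_id) == data`, where `PUBLISHER` and `AGGREGATOR` are the endpoints you chose.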

That’s not hype; it’s infrastructure fixing a billion-dollar problem, one verifiable blob at a time.