Google announced numerous new developments in the Android XR ecosystem during "The Android Show: XR Edition" held this morning on 12/9, including significant updates to the Galaxy XR head-mounted device and the first public unveiling of next-generation XR and AI glasses prototypes created by partners.

Galaxy XR updates: PC Connect, travel mode, and Likeness launch.

Google stated that new features for the Galaxy XR head-mounted device will roll out gradually starting 12/9, letting users integrate XR more naturally into their daily work and life.

PC Connect: Bring Windows and Google Play apps into XR space.

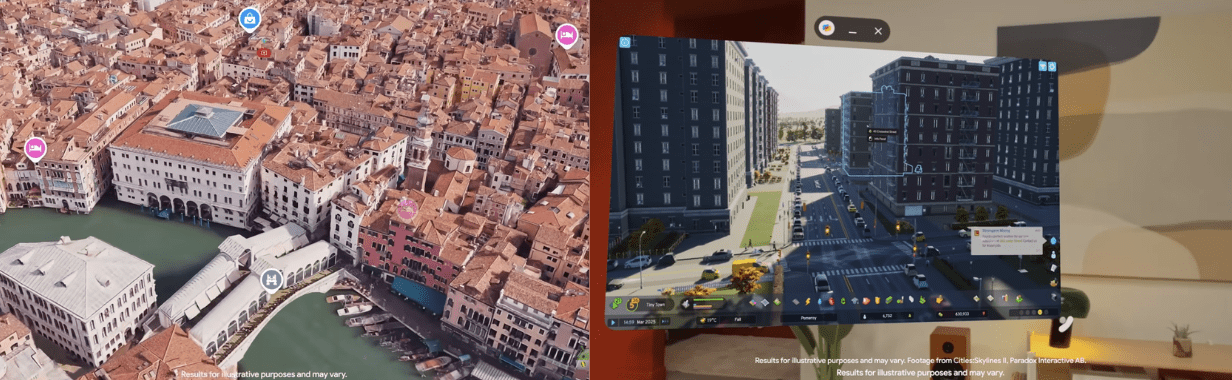

The newly announced PC Connect lets a Windows computer connect to Galaxy XR, bringing the desktop or any individual window into the XR interface to be used alongside native Google Play apps. Users can work on a massive virtual screen or even play PC games, with far more working space than a laptop screen provides. PC Connect starts rolling out in beta today.

PC Connect allows the use of Google Maps and gaming on the device, simulating an immersive experience.

Travel mode: stable use of XR on airplanes.

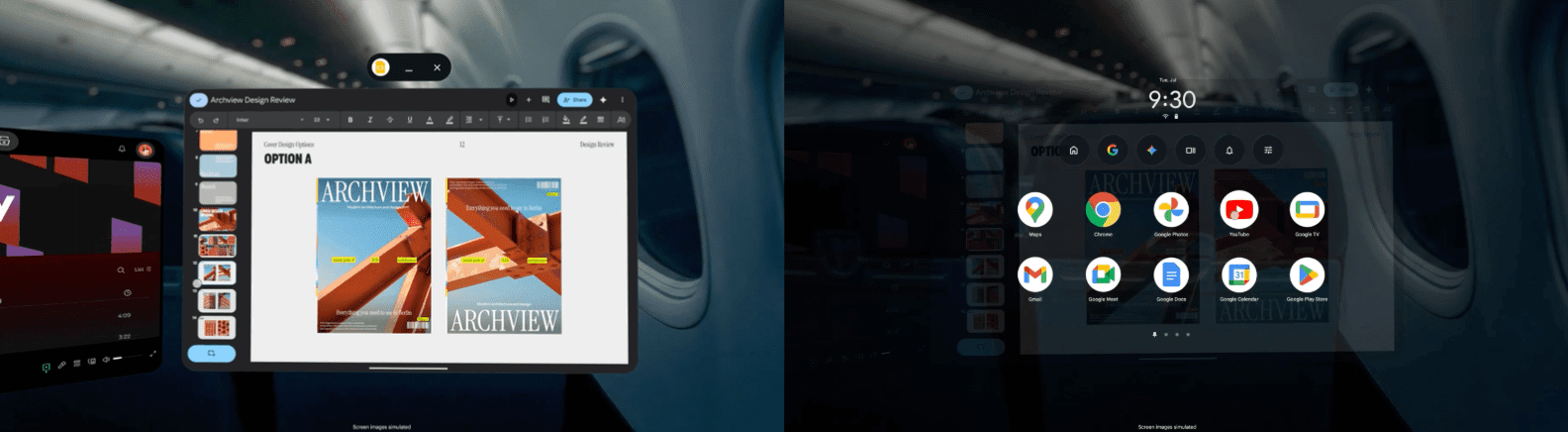

To let XR devices be used in more scenarios, Google introduced travel mode, which keeps the view stable in cramped environments such as airplane cabins, creating a personal cinema or immersive workspace that does not shake even when the wearer is in motion.

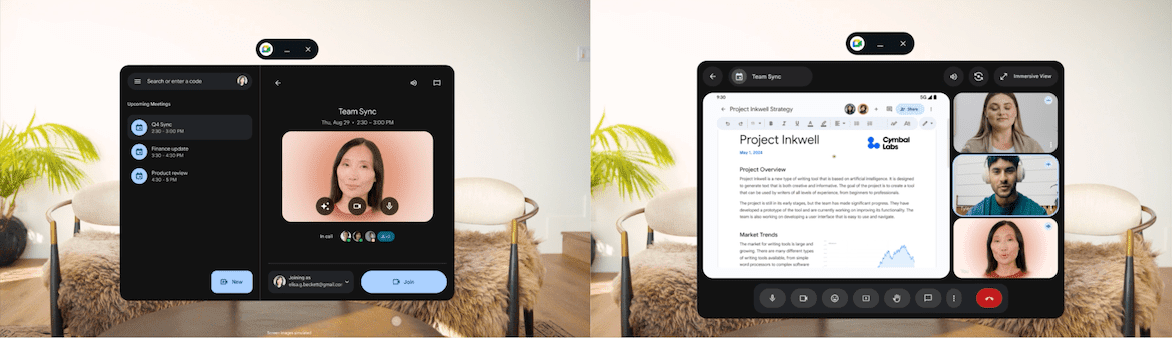

Likeness: Create a personal digital avatar capable of real-time expression synchronization.

Galaxy XR adds the Likeness feature, which lets users create a personal digital avatar from their own face and present it in video calls in real time.

Google stated that this lets users wear the device while still "letting the other party see their real expressions," making communication feel more natural. Likeness enters beta 1 today, with official operating instructions provided at the same time.

Building an XR device ecosystem: AI glasses and a new type of XR glasses unveiled for the first time.

Google pointed out that XR and AI hardware will not be limited to a single form factor, so the Android XR platform will support a wider variety of wearable devices, letting users choose the weight, shape, and level of immersion that best suit their needs.

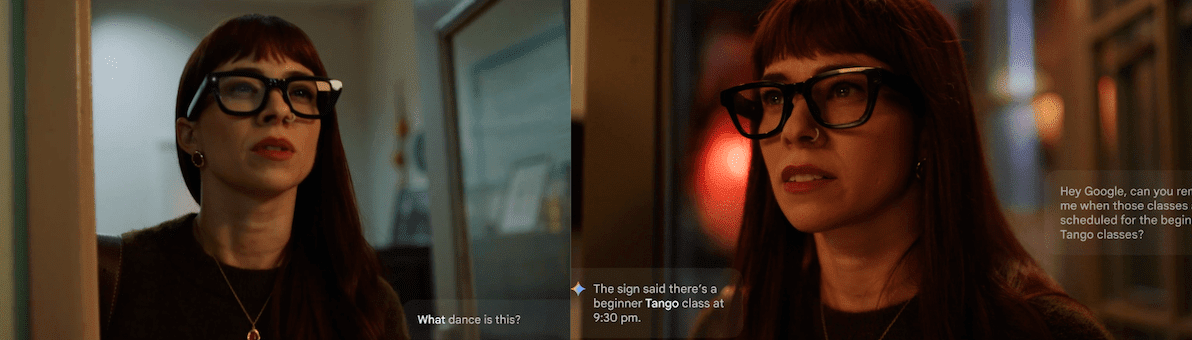

Two types of AI glasses: screenless voice assistant and information glasses with built-in display.

Google announced a collaboration with Samsung, Gentle Monster, and Warby Parker to create two types of AI glasses:

Screenless AI glasses: equipped with built-in speakers, a microphone, and a camera, they can converse with Gemini, take photos, and provide assistance, with a focus on being a lightweight AI device that can be worn all day.

Built-in display AI glasses: the lenses contain displays that present information privately, such as navigation, translation subtitles, and other real-time content.

Google stated that the first batch of AI glasses will be launched next year.

Project Aura: XREAL's first Android XR "wired XR glasses" revealed.

Android XR will also support wired XR glasses, combining the immersive experience of head-mounted devices with the lightweight design of regular glasses.

Google publicly unveiled XREAL's Project Aura at the event for the first time. It features a 70-degree field of view and optical see-through technology that overlays digital content directly onto the real world. Multiple windows can be placed at once, for example to follow a floating video tutorial while cooking or to display repair steps while fixing an appliance.

Google stated that more details will be announced next year, when Project Aura will also officially debut.

Developer tools update: Android XR SDK Developer Preview 3 released.

Google pointed out that hardware is just a "canvas," and that the real experience still depends on what developers build. It is therefore launching Android XR SDK Developer Preview 3 at the same time, allowing developers to officially create apps for AI glasses.

This update opens up AI glasses development tools and APIs for the first time, allowing developers to create practical augmented experiences like those of Uber and GetYourGuide. Google is also providing more APIs for XR head-mounted devices and wired XR glasses, making it easier to create richer and more immersive interactive content.

Developers can obtain tutorials through the Android Developer Blog, while general users can join Google's notification list to stay informed about new product launches.

This article, "Google Galaxy XR ecosystem debuts: device updates, AI glasses, and Project Aura all in one," first appeared in Chain News ABMedia.