"In the next two to three years, 90% of the world's new knowledge will be synthesized by AI." This claim by NVIDIA CEO Jensen Huang has recently caused a stir in the tech community. On the program, the "leather jacket godfather" said that generative AI is reaching a turning point and is advancing at a pace that is hard to keep up with.

How is AI-synthesized knowledge different from textbooks?

Jensen Huang said this is essentially no different from learning from a textbook written by someone you have never met. What matters is not who generated the content but whether it is reliable. Just as students do not blindly trust their textbooks, we need to fact-check AI outputs and verify the reasoning behind them. The source is no longer the sole standard for judging truth; critical thinking is what counts.

He offered an analogy: students cross-check information and ask teachers to confirm what their textbooks say, and getting knowledge from AI should work the same way. Whether it comes from a human expert or an AI model, any output that is logically coherent and grounded in fact can serve as learning material.

Tools have become smarter, and the barriers have disappeared.

ChatGPT has a unique property: you don't need to know how to use it; you simply ask it. No tool in history has offered this before. It also speaks many languages and can quickly pick up a new one to keep the conversation going. Jensen Huang believes this will greatly narrow the technology gap.

In the future, ordinary people won't need to learn programming languages like Python or C++; they will be able to work efficiently with computers in natural language. For instance, someone who wants to analyze data won't need to write code. They can simply say, "Help me analyze this month's sales data and generate charts," and the AI will do the rest.

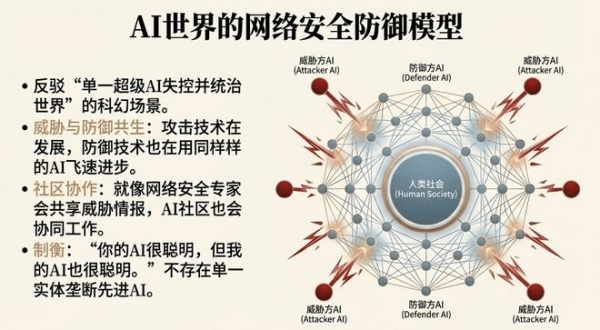

Who will pay for the risks behind the opportunities?

The spread of AI-generated knowledge also brings new problems: misinformation and bias may propagate faster. Jensen Huang emphasizes that users need to fact-check, while companies and developers must take responsibility for governance.

That means stricter data cleaning, better model alignment, and user education. Technology is not omnipotent; oversight and governance still matter. Just as social media needs content moderation, AI-generated knowledge also needs "gatekeepers."

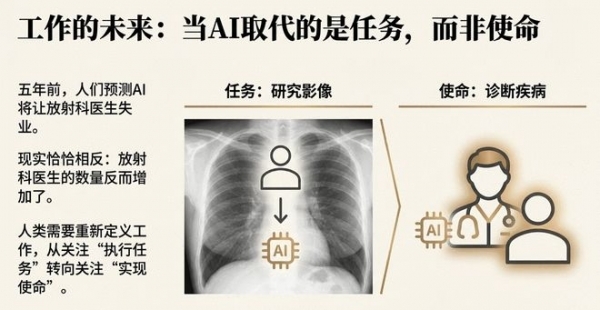

Education and work will change.

This wave of change will reshape how we learn and work. Education will shift from "teaching how to write code" to "teaching how to use AI to solve problems." Students need to learn how to ask effective questions and how to verify the AI's answers, rather than simply memorizing programming syntax.

Within companies, technical staff will focus on modeling problems and verifying results rather than writing every line of code by hand. Non-specialists will also be able to build and apply technology, which is part of the broader democratization of tools. At the same time, we need to learn to judge which sources are authoritative and to make sure the information we rely on is accurate.

Jensen Huang's prediction is not meant to cause panic but to remind us to prepare. In the era of AI-generated knowledge, we need both the courage to embrace change and the wisdom to stay rational. After all, no matter how powerful tools become, they ultimately serve people.